The Cache Effect

One of the main drivers for the adoption of Application Performance Monitoring (APM) is the complexity and dynamism of modern applications. Take a simple example: caching.

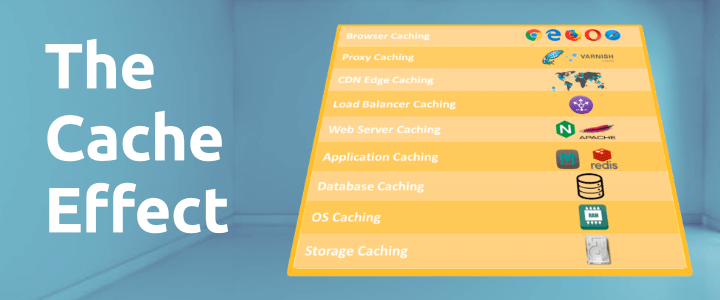

Application developers add many levels of cache to improve performance, but each level adds complexity. Imagine you make a web request for a single resource in your web browser. It could be the HTML of a page, an image or anything else. There is a chance that the browser already has the resource in its cache, in which case you will get an almost instant response. If it is not there, then your local proxy server may have it, or your load balancer or web server may have a copy. You may benefit from the fact that other people have asked the same question and you can use their answer. Even your database may have cached part of the answer. Only when the data is not in any cache does the application have to work it out from first principles. So caching gives you a range of response times for the same request. Which one will you get? You don’t know. On any one occasion you will get a response time somewhere between the best and the worst.
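
To make the lookup chain concrete, here is a minimal simulation sketch. The layer names follow the paragraph above, but the hit probabilities and latencies are invented purely for illustration; they are assumptions, not measurements from any real system.

```python
import random

# Hypothetical cache layers: (name, hit probability, latency in ms if served here).
# All numbers are illustrative assumptions.
LAYERS = [
    ("browser cache", 0.30, 1),
    ("proxy server", 0.20, 10),
    ("load balancer / web server", 0.30, 30),
    ("database cache", 0.50, 80),
]
ORIGIN_LATENCY_MS = 400  # assumed cost of working the answer out from scratch


def fetch(rng):
    """Walk the layers in order and return (served_by, latency_ms) for one request."""
    for name, hit_probability, latency_ms in LAYERS:
        if rng.random() < hit_probability:
            return name, latency_ms
    # No cache had the resource, so the application computes it.
    return "origin", ORIGIN_LATENCY_MS


rng = random.Random(42)  # fixed seed so the run is repeatable
samples = [fetch(rng) for _ in range(10_000)]
latencies = [latency for _, latency in samples]

print("fastest:", min(latencies), "ms")
print("slowest:", max(latencies), "ms")
```

The same request lands anywhere between the fastest and slowest figure depending on which layer happens to answer it, which is the spread described above.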

But you probably don’t want just one resource: you want a page and all of its contents. This gives rise to an even greater range of aggregated response-time values. As the number of requests increases, the response times tend to fit a well-known distribution, the log-normal distribution (https://en.wikipedia.org/wiki/Log-normal_distribution). This has a high initial peak at fast response times followed by a long tail that stretches out towards infinity.
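
The shape of that distribution can be seen with a short sketch that draws samples from a log-normal distribution using Python's standard library. The parameters (`mu=5.5`, `sigma=0.6`) are assumed values chosen only so that the numbers resemble milliseconds; they are not fitted to real data.

```python
import random
import statistics

rng = random.Random(7)  # fixed seed so the run is repeatable

# Assumed parameters: the median is about e**5.5, roughly 245 ms.
samples = [rng.lognormvariate(5.5, 0.6) for _ in range(10_000)]

median = statistics.median(samples)
mean = statistics.mean(samples)
# quantiles(n=100) returns 99 cut points; index 98 is the 99th percentile.
p99 = statistics.quantiles(samples, n=100)[98]

print(f"median: {median:.0f} ms")
print(f"mean:   {mean:.0f} ms")
print(f"p99:    {p99:.0f} ms")
```

The long tail pulls the mean above the median and pushes the 99th percentile far beyond both, which is why a single average says little about response times distributed this way.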

As an aside, you will sometimes see first-view and repeat-view figures quoted for the response time of a web page. The idea here is to see how the page performs on first viewing, where there is nothing in the browser cache (sometimes this situation is forced by clearing the browser cache first), and then how it performs on repeat viewing, when presumably the browser cache is filled. This is valid to a first order, but neither of these figures takes into account all the other application caches that were mentioned previously. It is nigh on impossible to have control over all caches. It is not practical to flush the cache on every web server and database server (not to mention the third-party applications that you rely on) just to satisfy your quest for repeatable results.

Finally, everything so far describes the situation where all is well. Now imagine superimposing performance slowdowns on this response-time distribution. It would be very difficult to tell from a single sample whether anything is abnormal, since there is so much variation anyway. You would need many samples from many locations, looking at many resources. We aren’t talking about one or two; we are talking about tens or even hundreds. This isn’t a job for a human; this is a job for software. This is where you need APM.
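
As a rough illustration of why a single sample is not enough, the sketch below compares a healthy set of response times with a degraded one. Everything in it is an assumption made for the example: the log-normal parameters, the 1.5x slowdown factor, and the helper name `sample_response_times` are all hypothetical, and this is not how any particular APM product works.

```python
import random
import statistics

rng = random.Random(1)  # fixed seed so the run is repeatable


def sample_response_times(n, slowdown=1.0):
    """Draw n simulated response times (ms), optionally scaled by a slowdown factor."""
    return [slowdown * rng.lognormvariate(5.5, 0.6) for _ in range(n)]


baseline = sample_response_times(500)
degraded = sample_response_times(500, slowdown=1.5)

# quantiles(n=20) returns 19 cut points; index 18 is the 95th percentile.
baseline_p95 = statistics.quantiles(baseline, n=20)[18]

# Fraction of degraded samples that, taken one at a time, still fall
# inside the range the healthy system produces 95% of the time.
looks_normal = sum(t <= baseline_p95 for t in degraded) / len(degraded)

# Comparing medians over hundreds of samples exposes the shift.
median_ratio = statistics.median(degraded) / statistics.median(baseline)

print(f"degraded samples that look normal individually: {looks_normal:.0%}")
print(f"degraded median / baseline median: {median_ratio:.2f}")
```

Most individual samples from the degraded system still fall inside the healthy range, while the comparison across hundreds of samples shows the slowdown clearly.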